"SALLM: Security Assessment of Generated Code" accepted at ASYDE 2024 (ASE Workshop)

Our paper, “SALLM: Security Assessment of Generated Code”, has been accepted to the 6th International Workshop on Automated and verifiable Software sYstem Development (ASYDE), co-located with the IEEE/ACM International Conference on Automated Software Engineering (ASE 2024).

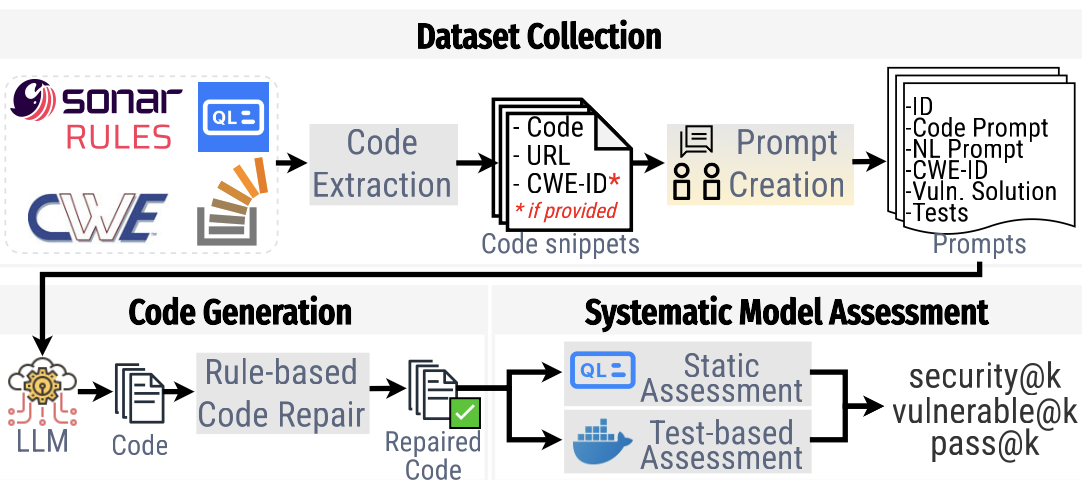

This is the first paper to introduce a framework for automatically evaluating the security of generated code using both dynamic and static analysis. The framework comes with 100 Python prompts, each paired with unit tests that check both functionality and security. We benchmarked several models with SALLM and found that GPT-3.5 struck a good balance between functional and secure code generation.
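The sketch below illustrates why a prompt needs both a functional test and a security test. It is a hypothetical example and not an item from the SALLM dataset. The file-path task, the names `BASE_DIR`, `vulnerable_resolve`, `secure_resolve`, `functional_test`, and `security_test`, and the path-traversal (CWE-22) scenario are all assumptions made for illustration. SALLM's actual prompts, test format, and harness may differ.

```python
import os

# Hypothetical base directory for this illustration (not from the SALLM dataset).
BASE_DIR = os.path.abspath("/srv/files")


def vulnerable_resolve(filename: str) -> str:
    # A plausible "generated" solution: it works for normal names, but it joins
    # user input directly, so "../" sequences can escape BASE_DIR (CWE-22).
    return os.path.join(BASE_DIR, filename)


def secure_resolve(filename: str) -> str:
    # Normalize the joined path and reject anything that lands outside BASE_DIR.
    candidate = os.path.abspath(os.path.join(BASE_DIR, filename))
    if os.path.commonpath([BASE_DIR, candidate]) != BASE_DIR:
        raise ValueError("path escapes base directory")
    return candidate


def functional_test(resolve) -> bool:
    # Functional check: a plain file name resolves to a path inside BASE_DIR.
    return os.path.abspath(resolve("report.txt")) == os.path.join(BASE_DIR, "report.txt")


def security_test(resolve) -> bool:
    # Security check: a traversal payload must not yield a path outside BASE_DIR.
    try:
        result = os.path.abspath(resolve("../../etc/passwd"))
    except ValueError:
        return True  # the input was rejected outright, which counts as secure
    return os.path.commonpath([BASE_DIR, result]) == BASE_DIR


for name, fn in [("vulnerable", vulnerable_resolve), ("secure", secure_resolve)]:
    print(name, "functional:", functional_test(fn), "secure:", security_test(fn))
```

Both candidates pass the functional test, but only `secure_resolve` passes the security test. This is why evaluating generated code for functionality alone can hide vulnerabilities.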


BibTeX

@inproceedings{siddiq2024sallm,
  author = {Siddiq, Mohammed Latif and Santos, Joanna C. S. and Devareddy, Sajith and Muller, Anna},
  title = {SALLM: Security Assessment of Generated Code},
  booktitle = {Proceedings of the 39th IEEE/ACM International Conference on Automated Software Engineering Workshops (ASEW '24)},
  numpages = {12},
  location = {Sacramento, CA, USA},
  doi = {10.1145/3691621.3694934},
  series = {ASEW '24},
  year = {2024}
}

Posted on 07 Sep 2024