"Using Large Language Models to Generate JUnit Tests: An Empirical Study" accepted at EASE 2024.

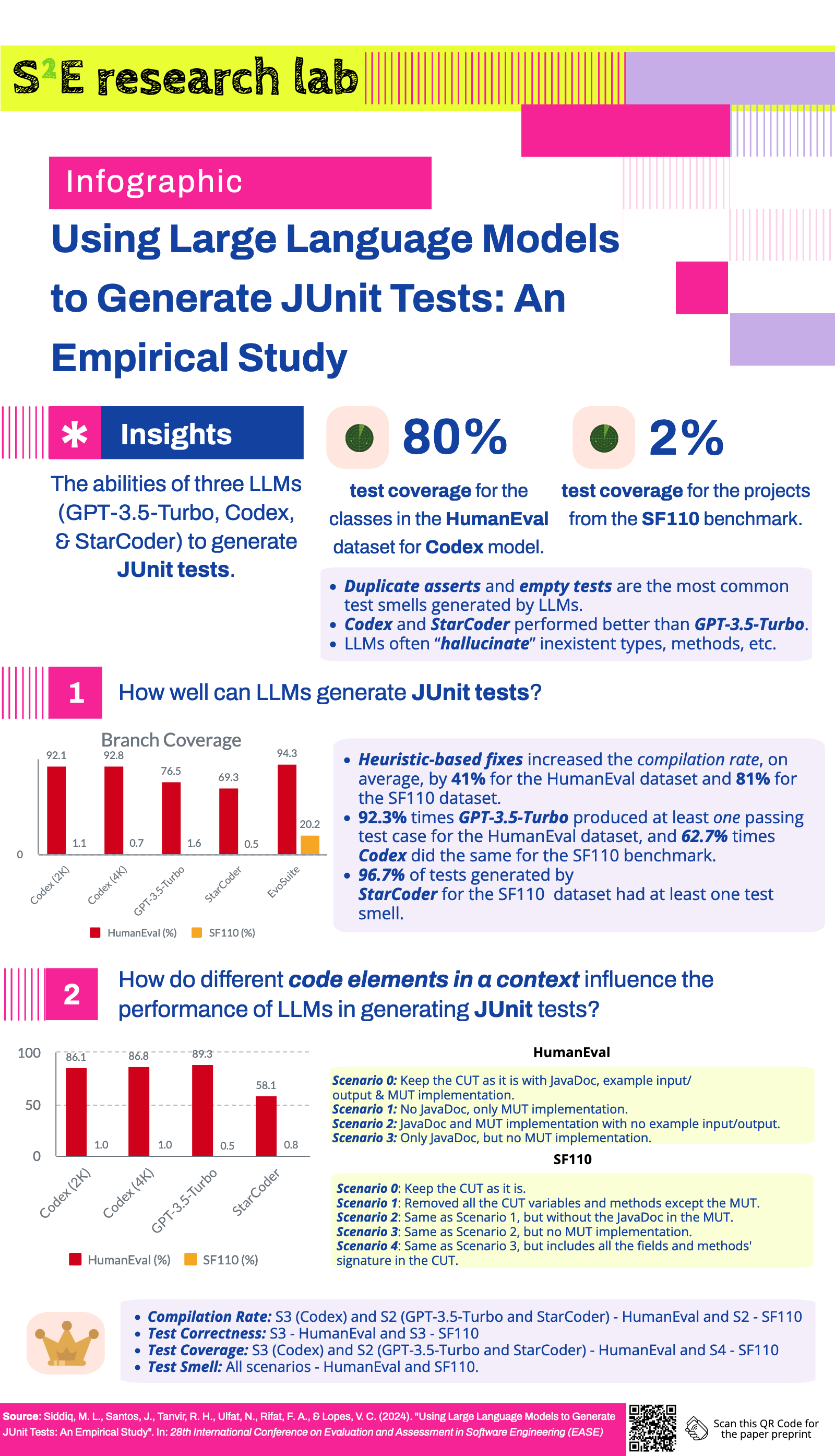

Our paper, Using Large Language Models to Generate JUnit Tests: An Empirical Study, got accepted for the 28th International Conference on Evaluation and Assessment in Software Engineering (EASE 2024) in the research track. In this work, we analyzed three models with different prompting techniques to generate JUnit tests for the HumanEval dataset and real-world software. We evaluated the LLMs’ generated tests using compilation rates, test correctness, test coverage, and test smell. We found that though the models have higher coverage for small programming problems from the HumanEval dataset, they lack good coverage for real-world software from the Evosuite dataset.

Infographic

Related Links

BibTeX

@inproceedings{siddiq2024junit,

author={Siddiq, Mohammed Latif and Santos, Joanna C. S. and Tanvir, Ridwanul Hasan and Ulfat, Noshin and Rifat, Fahmid Al and Lopes, Vinicius Carvalho},

title={Using Large Language Models to Generate JUnit Tests: An Empirical Study},

booktitle = {Proceedings of the 28th International Conference on Evaluation and Assessment in Software Engineering},

pages = {313–322},

numpages = {10},

keywords = {junit, large language models, test generation, test smells, unit testing},

doi = {10.1145/3661167.3661216}

location = {Salerno, Italy},

series = {EASE '24}

}Subscribe

Subscribe to this blog via RSS.

Categories

Paper 13

Research 13

Tool 2

Llm 10

Dataset 2

Survey 1

Recent Posts

"SALLM: Security Assessment of Generated Code" accepted at ASYDE 2024 (ASE Workshop)

Posted on 07 Sep 2024Popular Tags

Paper (13) Research (13) Tool (2) Llm (10) Dataset (2) Qualitative-analysis (1) Survey (1)