"An Empirical Study of Code Smells in Transformer-based Code Generation Techniques" accepted at SCAM 2022

Our paper, “An Empirical Study of Code Smells in Transformer-based Code Generation Techniques”, has been accepted to the research track of the 22nd IEEE International Working Conference on Source Code Analysis and Manipulation (SCAM 2022).

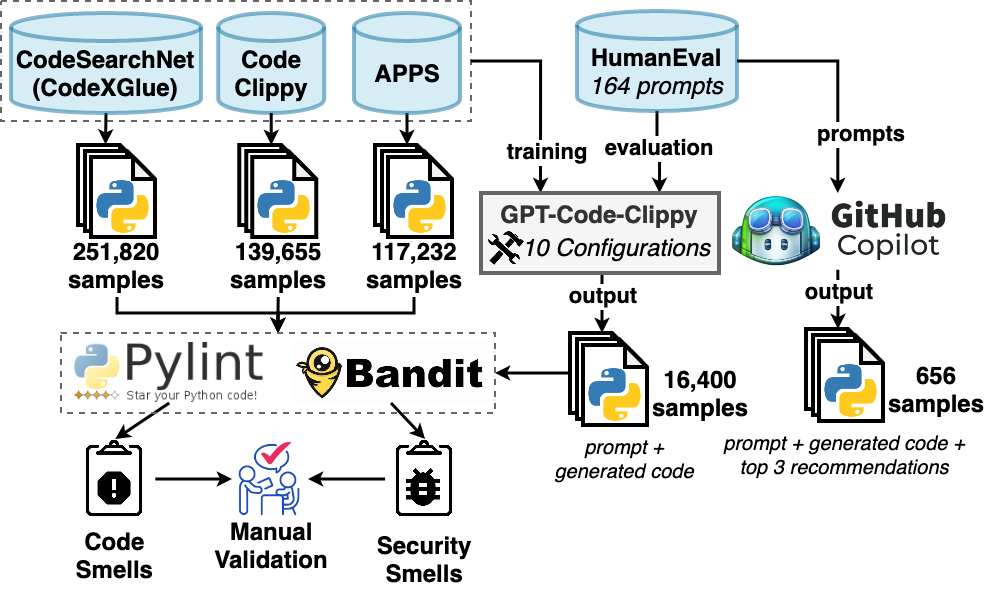

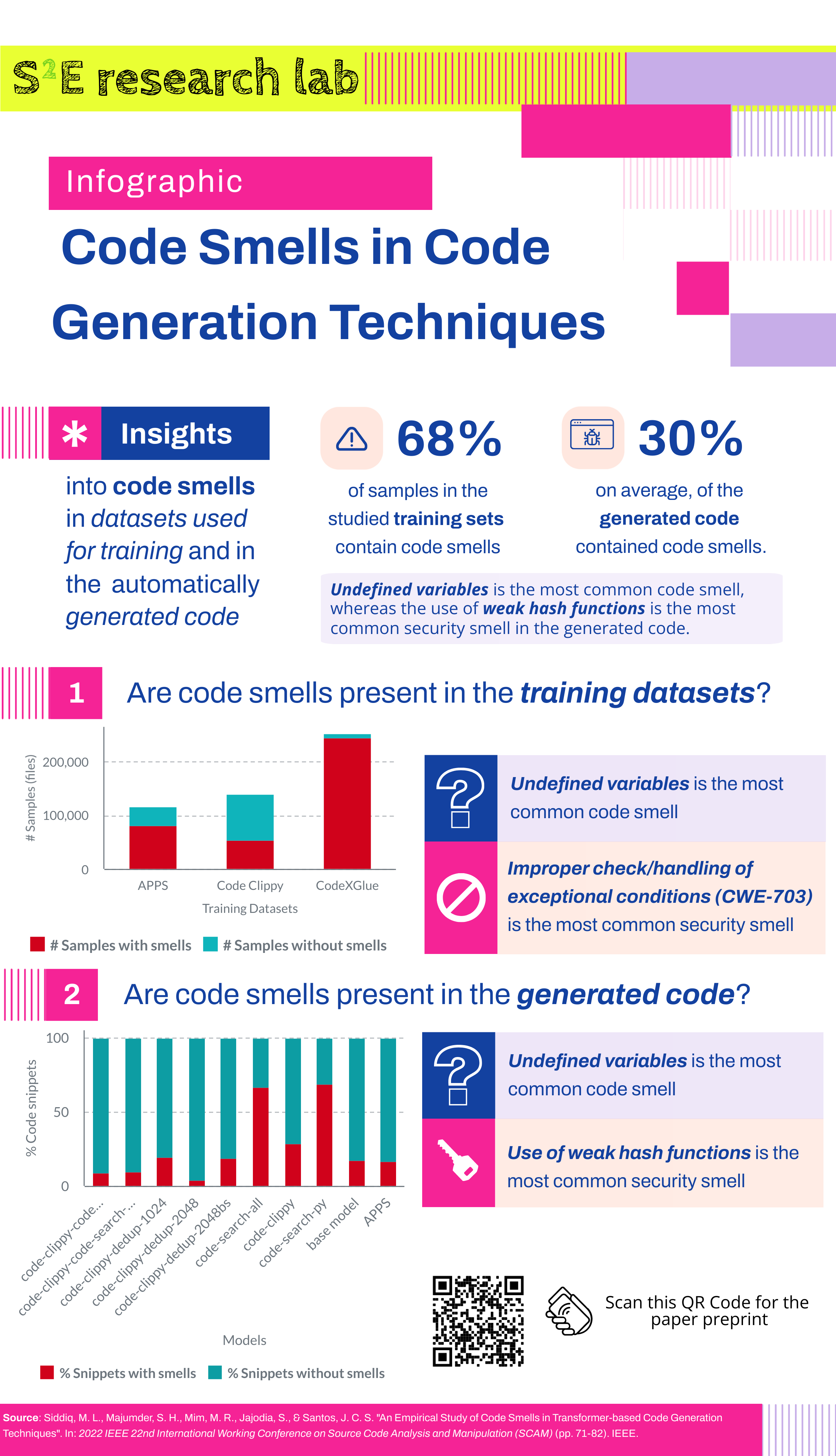

We conduct an empirical analysis of code smells in the training sets of transformer-based Python code generation models and examine how these bad patterns end up in the models' output. For this study, we obtained three open-source datasets (CodeXGLUE, APPS, and Code Clippy) that are frequently used to train Python code generation techniques and measured how prevalent code smells are in them. We also investigated code smells in the code that transformer-based models produce. For this experiment, we measured the code smells in the output of one open-source and one closed-source code generation tool: GPT-Code-Clippy and GitHub Copilot, respectively.
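To make the idea of measuring code smells across a set of samples concrete, here is a minimal sketch. It is not the tooling used in the paper (this post does not name the analyzers); it assumes only Python's standard `ast` module, and the two checks and the function names `count_smells` and `aggregate_smells` are hypothetical choices made for illustration.

```python
import ast
from collections import Counter


def count_smells(source: str) -> Counter:
    """Count a few illustrative smells in one Python snippet (hypothetical checks)."""
    smells = Counter()
    try:
        tree = ast.parse(source)
    except SyntaxError:
        # Dataset entries and generated code are not guaranteed to parse.
        smells["syntax-error"] += 1
        return smells

    for node in ast.walk(tree):
        # A bare `except:` catches every exception, hiding real failures.
        if isinstance(node, ast.ExceptHandler) and node.type is None:
            smells["bare-except"] += 1

        # A mutable default argument is shared between calls.
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            defaults = list(node.args.defaults)
            defaults += [d for d in node.args.kw_defaults if d is not None]
            for default in defaults:
                if isinstance(default, (ast.List, ast.Dict, ast.Set)):
                    smells["mutable-default-argument"] += 1
    return smells


def aggregate_smells(samples) -> Counter:
    """Sum smell counts over an iterable of code strings."""
    total = Counter()
    for sample in samples:
        total.update(count_smells(sample))
    return total


if __name__ == "__main__":
    samples = [
        "def f(x, acc=[]):\n    acc.append(x)\n    return acc\n",
        "try:\n    pass\nexcept:\n    pass\n",
    ]
    print(aggregate_smells(samples))
```

The same aggregation could be run once over training-set samples and once over model outputs to compare the two distributions. A real study would rely on established static analyzers rather than hand-written checks like these.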

This paper makes three contributions:

- a comprehensive empirical analysis of code smell occurrence in the datasets and the outputs of transformer-based Python code generation techniques

- an examination of the potential differences between transformer-based open-source and closed-source methodologies

- a discussion of the implications of the results for researchers and practitioners.

Pre-print: SCAM 2022

Source Code: GitHub

Infographic

Related Links

BibTeX

@inproceedings{siddiq2022empirical,
  author={Siddiq, Mohammed Latif and Majumder, Shafayat H. and Mim, Maisha R. and Jajodia, Sourov and Santos, Joanna C. S.},
  booktitle={2022 IEEE 22nd International Working Conference on Source Code Analysis and Manipulation (SCAM)},
  title={An Empirical Study of Code Smells in Transformer-based Code Generation Techniques},
  year={2022},
  volume={},
  number={},
  pages={71-82},
  doi={10.1109/SCAM55253.2022.00014}
}
