"Re(gEx|DoS)Eval: Evaluating Generated Regular Expressions and their Proneness to DoS Attacks" accepted at ICSE-NIER 2024.

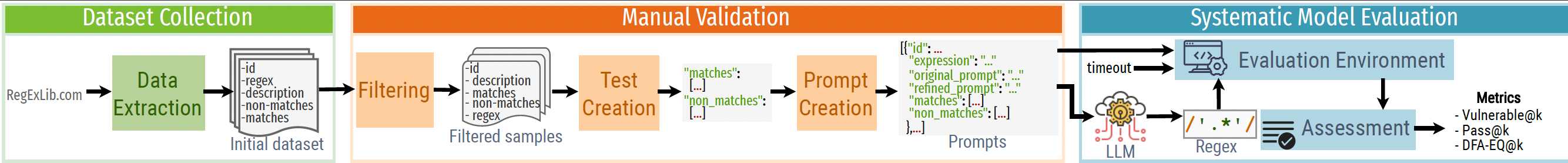

Our paper, “Re(gEx|DoS)Eval: Evaluating Generated Regular Expressions and their Proneness to DoS Attacks,” was accepted at the 46th International Conference on Software Engineering (ICSE 2024), New Ideas and Emerging Results (NIER) Track. In this work, we present a novel dataset and framework for evaluating LLM-generated regular expressions (RegEx) and their vulnerability to regular expression denial-of-service (ReDoS) attacks.

The Re(gEx|DoS)Eval framework includes a dataset of 762 regex descriptions (prompts) from real users, refined prompts with examples, and a robust set of tests. We introduce the pass@k and vulnerable@k metrics to evaluate generated regexes on their functional correctness and their proneness to ReDoS attacks. We also demonstrate Re(gEx|DoS)Eval on three large language model families (T5, Phi, and GPT-3) and outline our plans for extending the framework.
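To give a feel for how metrics of this kind are usually computed, here is a minimal sketch. It assumes the unbiased pass@k estimator commonly used for code generation benchmarks (1 - C(n-c, k) / C(n, k), where n is the number of regexes generated for a prompt and c is the number that have the property of interest), and it assumes vulnerable@k applies the same estimator to the count of ReDoS-prone samples. The function names are hypothetical and are not taken from the Re(gEx|DoS)Eval code base; see the paper for the exact definitions.

```python
from math import comb


def at_k(n: int, c: int, k: int) -> float:
    """Estimate the probability that at least one of k samples, drawn
    without replacement from n generated samples, has a given property,
    when c of the n samples have that property.
    """
    if not 0 <= c <= n:
        raise ValueError("c must be between 0 and n")
    if not 1 <= k <= n:
        raise ValueError("k must be between 1 and n")
    # comb(n - c, k) is 0 when fewer than k samples lack the property,
    # so the estimate is exactly 1.0 in that case.
    return 1.0 - comb(n - c, k) / comb(n, k)


def pass_at_k(n: int, num_correct: int, k: int) -> float:
    """Hypothetical helper: chance that at least one of k regexes passes all tests."""
    return at_k(n, num_correct, k)


def vulnerable_at_k(n: int, num_vulnerable: int, k: int) -> float:
    """Hypothetical helper: chance that at least one of k regexes is ReDoS-prone
    (assumed here to mirror pass@k)."""
    return at_k(n, num_vulnerable, k)
```

For example, with 10 generated regexes for a prompt, of which 3 pass every test, `pass_at_k(10, 3, 1)` is about 0.3. A per-prompt score like this would then be averaged over all prompts in the dataset. Note that a higher pass@k is better, whereas a higher vulnerable@k is worse.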


BibTeX

@inproceedings{siddiq2024regexeval,
  author = {Siddiq, Mohammed Latif and Zhang, Jiahao and Roney, Lindsay and Santos, Joanna C. S.},
  booktitle = {Proceedings of the 46th International Conference on Software Engineering, NIER Track (ICSE-NIER '24)},
  title = {Re(gEx|DoS)Eval: Evaluating Generated Regular Expressions and their Proneness to DoS Attacks},
  year = {2024},
  doi = {10.1145/3639476.3639757}
}
